Experiment plays a central role in modern science, and most new technical discoveries rest on it. This article discusses the difficulties that arise in conducting an experiment and the methods used to carry one out.

Today, no scientific or technical research can do without experiment. It is needed both in the applied sciences and in the development of new fields of science, and technological progress depends on it.

Problems of the experiment

Technological progress confronts the experimental engineer with new challenges. One is that the parameters of the test object that need to be determined (durability, corrosion resistance, etc.) often cannot be measured directly. In other words, the set of technical and economic indicators by which the test object is assessed does not, in most cases, coincide with the set of object parameters determined from the results of a full-scale experiment.

Another problem is organizing tests of objects whose processes have complex dynamics and are subject to variable environmental conditions.

When complex systems are tested, it becomes increasingly important to account for the effect that the recording and control equipment has on the functioning of the tested object itself.

Therefore, the main principle of organizing an experiment under modern conditions is the systems approach.

The systems approach treats all the means involved in the experiment as a single system described by an appropriate mathematical model. The mathematical model thus becomes an element of the testing itself, and the planning of the experiment, its conduct and the processing of its results are built on it. Only relationships connecting the required technical and economic characteristics of the test object with its parameters make it possible to reach well-founded judgments about the list of necessary test activities and their rational sequence, the set of recorded quantities, the requirements for measurement accuracy, the recording frequency, etc.

To build a mathematical model, it is necessary to have an understanding of the behavior of individual elements, the interaction between them, the influence of various factors, as well as the reaction to changes in test conditions.

Methods

What can unite engineers, physicists, biologists, sociologists and other specialists? Biologists test medicines on animals and clone organisms; engineers carry out research and test various materials; sociologists collect and process information. Each specialist follows his own path, and the one thing that unites them is experiment.

Nevertheless, the way experiments are carried out in different fields has many features in common:

1. All researchers pay attention to the accuracy of measuring instruments and the accuracy of the data obtained.

2. Every researcher tries to minimize the number of variables involved in the experiment, since this makes the work faster and cheaper.

3. The experiment can be of any complexity, but the first thing you need to do is write a plan for its implementation. When constructing an experimental plan, it is very important to formulate questions correctly and clearly.

4. During the experiment, the researcher must check the test object for errors and malfunctions. This task involves checking the acceptability of the data obtained. The results should not contradict logic.

5. During any experiment, you need to analyze the data obtained and give an explanation for them, because without this point the experiment will not make sense.

6. All researchers control the experiment they conduct, that is, they seek to exclude any dependence on external variables.

Experiments may differ in nature, but the planning, execution and analysis of every experiment must follow the same sequence. The results are usually presented as tables, graphs and formulas. What differs is the quality of the experiment.

Each experiment ends with the presentation of the result, the formulation of a conclusion and the issuing of a recommendation. To show how the result depends on several parameters, one has to construct several graphs or a single graph in isometric coordinates. Highly complex functions cannot yet be depicted with graphs. Mathematical formulas can express the dependence of the result on a larger number of variables, but even these are, as a rule, limited to three variables.

Presenting the results of an experiment in purely verbal form is the least effective option.

Most technical experiments end in some action: making a decision, continuing the test, or admitting failure.

The researcher must methodically and thoroughly consider all likely external influences and optimal control methods. He must be able to distinguish an exceptional and special effect from a variety of extraneous influences and external error factors.

Random discoveries appear when all foreseen possibilities have been calculated, predicted, or eliminated in advance, and only completely new, previously unexplored possibilities can open up.

A methodology is the totality of mental and physical operations, arranged in a certain sequence, by which the goal of the study is achieved.

When developing experimental methods, it is necessary to provide for:

Conducting preliminary targeted observation of the object or phenomenon being studied in order to determine the initial data (hypotheses, selection of varying factors);

Creation of conditions in which experimentation is possible (selection of objects for experimental influence, elimination of the influence of random factors);

Determination of measurement limits;

Systematic observation of the development of the phenomenon being studied and accurate description of the facts;

Carrying out systematic recording of measurements and assessments of facts by various means and methods;

Creation of repeating situations, changing the nature of conditions and cross-impacts, creating complicated situations in order to confirm or refute previously obtained data;

The transition from empirical study to logical generalizations, to analysis and theoretical processing of the received factual material.

Before each experiment, a plan (program) is drawn up, which includes:

The purpose and objectives of the experiment;

Selection of varying factors;

Justification of the scope of the experiment, number of experiments;

The procedure for implementing experiments, determining the sequence of changes in factors;

Choosing a step for changing factors, setting intervals between future experimental points;

Justification of measuring instruments;

Description of the experiment;

Justification of methods for processing and analyzing experimental results.

Experimental results must meet three statistical requirements:

The requirement of efficiency of the estimates, i.e. minimum variance of the deviation from the unknown parameter;

The requirement of consistency of the estimates, i.e. as the number of observations increases, the parameter estimate should tend to its true value;

The requirement of unbiasedness of the estimates, i.e. the absence of systematic errors in the process of calculating the parameters.

The most important problem in conducting and processing an experiment is the compatibility of these three requirements.
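The consistency and unbiasedness requirements can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: the data are drawn from a normal distribution, and the function names (`average_variance_estimates`, `sample_mean`) are hypothetical. It compares the variance estimator that divides by n (biased) with the one that divides by n - 1 (unbiased), and shows the sample mean approaching the true value as the number of observations grows.

```python
import random
import statistics


def average_variance_estimates(n, trials, sigma=2.0, seed=0):
    """Average the biased and unbiased variance estimates over many samples.

    Assumption for illustration: observations are normal with mean 0
    and standard deviation `sigma`, so the true variance is sigma**2.
    """
    rng = random.Random(seed)
    biased, unbiased = [], []
    for _ in range(trials):
        sample = [rng.gauss(0.0, sigma) for _ in range(n)]
        biased.append(statistics.pvariance(sample))   # divides by n
        unbiased.append(statistics.variance(sample))  # divides by n - 1
    return statistics.fmean(biased), statistics.fmean(unbiased)


def sample_mean(n, mu=10.0, sigma=2.0, seed=1):
    """Sample mean of n simulated observations (a consistent estimate of mu)."""
    rng = random.Random(seed)
    return statistics.fmean([rng.gauss(mu, sigma) for _ in range(n)])


if __name__ == "__main__":
    b, u = average_variance_estimates(n=5, trials=20000)
    print("true variance 4.0, biased average:", round(b, 2), "unbiased average:", round(u, 2))
    for n in (10, 1000, 100000):
        print(n, "observations, sample mean:", round(sample_mean(n), 3))
```

With five observations per sample, the biased estimator averages to about (n - 1)/n of the true variance, which is a systematic error that no number of repeated samples removes; only a larger sample size reduces it.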

Elements of the theory of experimental design

The mathematical theory of experiment determines the conditions for optimal research, including in the case of incomplete knowledge of the physical essence of the phenomenon. For this purpose, mathematical methods are used in preparing and conducting experiments, which makes it possible to study and optimize complex systems and processes, ensure high efficiency of the experiment and accuracy in determining the factors under study.

Experiments are usually carried out in small series according to a pre-agreed algorithm. After each small series, the observations are processed and a well-founded decision is made on what to do next.

When using methods of mathematical experimental planning it is possible:

Solve various issues related to the study of complex processes and phenomena;

Conduct an experiment in order to adapt the technological process to changing optimal conditions for its occurrence and thus ensure high efficiency of its implementation, etc.

The theory of mathematical experiment contains a number of concepts that ensure the successful implementation of research tasks:

The concept of randomization;

The concept of sequential experiment;

The concept of mathematical modeling;

The concept of optimal use of the factor space, and a number of others.

The principle of randomization is that an element of randomness is introduced into the experimental design. The plan is drawn up so that systematic factors that are difficult to control are accounted for statistically and then excluded from the study as systematic errors.
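One common way to introduce randomness into a plan is to shuffle the order in which the runs are performed, so that slow drifts (instrument warm-up, time of day) do not line up with any one treatment. The following is a minimal sketch; the function name `randomized_run_order` and the treatment labels are hypothetical.

```python
import random


def randomized_run_order(treatments, replicates, seed=None):
    """Return every treatment repeated `replicates` times, in random order."""
    runs = [t for t in treatments for _ in range(replicates)]
    rng = random.Random(seed)  # a fixed seed makes the plan reproducible
    rng.shuffle(runs)
    return runs


if __name__ == "__main__":
    print(randomized_run_order(["A", "B", "C"], replicates=4, seed=42))
```

Recording the seed alongside the results lets the run order be reconstructed later.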

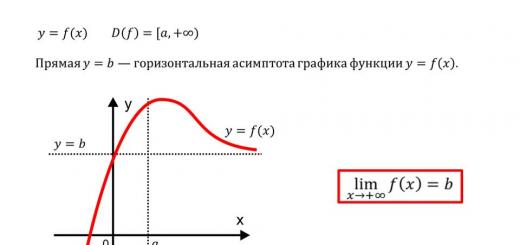

In a sequential experiment, the runs are not all performed at once but in stages, so that the results of each stage are analyzed and a decision is made on whether further research is advisable (Fig. 2.1). The outcome of the experiment is a regression equation, which is often called a process model.
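As an illustration of what a regression equation looks like in the simplest case, the sketch below fits a straight line y = b0 + b1·x to experimental points by ordinary least squares. The single-factor linear form and the function name `fit_line` are assumptions made for the example; real process models usually involve several factors.

```python
def fit_line(xs, ys):
    """Least-squares estimates (b0, b1) for the model y = b0 + b1 * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b1 = sxy / sxx              # slope
    b0 = mean_y - b1 * mean_x   # intercept
    return b0, b1


if __name__ == "__main__":
    # Hypothetical factor levels and measured responses.
    b0, b1 = fit_line([0, 1, 2, 3], [2.1, 4.9, 8.2, 10.8])
    print(f"y = {b0:.2f} + {b1:.2f} * x")
```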

In each specific case, the mathematical model is built according to the purpose of the process and the research objectives, taking into account the required accuracy of the solution and the reliability of the source data.

An important place in the theory of experimental planning is occupied by the optimization of the processes under study, of the properties of multicomponent systems, or of other objects.

As a rule, it is impossible to find a combination of values of the influencing factors that achieves the extremum of all response functions at once. Therefore, in most cases, only one of the state variables, the response function characterizing the process, is chosen as the optimality criterion, and the rest are held within limits acceptable for the given case.
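The idea of optimizing one response while keeping the others within acceptable limits can be sketched as a search over the factor levels. Everything specific here is hypothetical: the two response functions, the limit of 20, and the name `best_setting` are invented for the illustration, and an exhaustive search is practical only for a small number of levels.

```python
from itertools import product


def best_setting(levels_x1, levels_x2, criterion, constraints):
    """Maximize `criterion` over all level combinations that satisfy
    every function in `constraints`. Returns (value, (x1, x2)) or None."""
    best = None
    for x1, x2 in product(levels_x1, levels_x2):
        if all(check(x1, x2) for check in constraints):
            value = criterion(x1, x2)
            if best is None or value > best[0]:
                best = (value, (x1, x2))
    return best


# Hypothetical response functions of two factors.
def yield_response(x1, x2):
    return x1 + 2 * x2


def cost_response(x1, x2):
    return 3 * x1 + 5 * x2


if __name__ == "__main__":
    result = best_setting(
        range(5), range(5),
        criterion=yield_response,                             # optimality criterion
        constraints=[lambda a, b: cost_response(a, b) <= 20],  # acceptable limit
    )
    print(result)
```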

Methods for planning experiments are currently developing rapidly, facilitated by the possibility of widespread use of computers.

Computational experiment refers to the methodology and technology of research based on the use of applied mathematics and electronic computers as a technical basis for the use of mathematical models.

Thus, a computational experiment is based on the creation of mathematical models of the objects under study, which are formed using some special mathematical structure that can reflect the properties of the object that it exhibits under various experimental conditions.

However, these mathematical structures turn into models only when the elements of the structure are given a physical interpretation, when a relationship is established between the parameters of the mathematical structure and the experimentally determined properties of the object, when the characteristics of the elements of the model and the model itself as a whole correspond to the properties of the object.

Thus, mathematical structures, together with a description of the correspondence to the experimentally discovered properties of the object, are a model of the object being studied, reflecting in a mathematical, symbolic (sign) form the dependencies, connections and laws that objectively exist in nature.

Each computational experiment is based both on a mathematical model and on the techniques of computational mathematics. Modern computational mathematics consists of many sections developing along with the development of electronic computing technology.

Based on mathematical modeling and methods of computational mathematics, the theory and practice of computational experiments were created, the technological cycle of which is usually divided into the following stages.

1. A model of the object under study is built, usually a physical one at first. It records how all the factors acting in the phenomenon are divided into main factors and secondary ones, the latter being discarded at this stage of the study.

2. A method for solving the formulated mathematical problem is developed. The method is presented as a set of algebraic formulas by which the calculations are carried out, together with conditions specifying the order in which the formulas are applied; this set of formulas and conditions is called a computational algorithm.

A computational experiment is multivariate in nature, since solutions to posed problems often depend on numerous input parameters.

In this regard, efficient numerical methods should be used when organizing a computational experiment.

3. An algorithm and a program for solving the problem on a computer are developed. Programming is no longer determined only by the skill and experience of the programmer; it is growing into an independent science with its own fundamental approaches.

4. The calculations are carried out on a computer. The result is obtained as numerical information, which then has to be interpreted. The accuracy of the information obtained in a computational experiment is determined by the reliability of the underlying model and by the correctness of the algorithms and programs (preliminary test runs are carried out to check them).

5. The calculation results are processed and analyzed, and conclusions are drawn. At this stage it may become necessary to refine the mathematical model (making it more complex or, conversely, simpler), and proposals may arise for simplified engineering solutions and formulas that yield the necessary information more simply.
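The preliminary test runs mentioned in stage 4 usually mean running the program on a problem whose answer is known. The sketch below is one hypothetical example, not a method taken from the text: a simple explicit Euler scheme for the decay equation dy/dt = -k·y is checked against the exact solution y0·exp(-k·t). The names `euler_decay` and `check_against_exact` are invented for the illustration.

```python
import math


def euler_decay(y0, k, t_end, steps):
    """Integrate dy/dt = -k * y from 0 to t_end with the explicit Euler method."""
    dt = t_end / steps
    y = y0
    for _ in range(steps):
        y += dt * (-k * y)
    return y


def check_against_exact(steps, y0=1.0, k=1.0, t_end=1.0):
    """Absolute error of the numerical result relative to y0 * exp(-k * t_end)."""
    exact = y0 * math.exp(-k * t_end)
    return abs(euler_decay(y0, k, t_end, steps) - exact)


if __name__ == "__main__":
    for steps in (10, 100, 1000):
        print(steps, "steps, error:", check_against_exact(steps))
```

If the error does not shrink as the step is refined, the fault lies in the algorithm or the program rather than in the model, which is exactly what such a check is meant to separate.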

A computational experiment acquires exceptional importance in cases where full-scale experiments and the construction of a physical model turn out to be impossible.

There are many areas in science and technology in which a computational experiment is the only possible one in the study of complex systems.

General principles of experimental design



Since its inception, science has been looking for ways to understand the laws of the surrounding world. Making one discovery after another, scientists rise higher and higher on the ladder of knowledge, erasing the boundary of the unknown and reaching new frontiers of science. This path lies through experiment. By consciously limiting the infinite diversity of nature within the artificial framework of scientific experience, we transform it into a picture of the world understandable to the human mind.

Experiment as scientific research is the form in which and through which science exists and develops. The experiment requires careful preparation before it is carried out. In biomedical research, design of the experimental part of the study is especially important due to the wide variability of properties characteristic of biological objects. This feature is the main reason for the difficulties in interpreting the results, which can vary significantly from experiment to experiment.

Statistical problems justify the need to choose an experimental design that would minimize the influence of variability on the scientist's conclusions. Therefore, the goal of experimental design is to create the design necessary to obtain as much information as possible at the least cost to complete the study. More precisely, experimental planning can be defined as the procedure for selecting the number and conditions for conducting experiments that are necessary and sufficient to solve a given problem with the required accuracy.

Experimental design originated in agrobiology and is associated with the name of the English statistician and biologist Sir Ronald Aylmer Fisher. At the beginning of the 20th century, at the agrobiological station in Rothamsted (UK), studies began on the effect of fertilizers on the yield of various varieties of grain. Scientists were forced to take into account both the great variability of the research objects and the long duration of the experiments (about a year). Under these conditions, there was no other way but to develop a well-thought-out experimental design to reduce the negative impact of these factors on the accuracy of the conclusions. By applying statistical knowledge to biological problems, Fisher came to develop his own principles of statistical inference theory and pioneered the new science of design and analysis of experiments.

Ronald Fisher himself explained the basics of planning using the example of an experiment designed to determine whether a certain English lady could tell what had been poured into a cup first, tea or milk. For a real English lady, it is said, the tea must be poured into the milk and not vice versa: reversing the order is a sign of ignorance and spoils the taste of the drink.

The experiment is simple: the lady tries tea with milk and tries to understand by taste in what order both ingredients were poured. The design developed for this study has a number of properties.

Comparison. In many studies, precise determination of the measurement result is difficult or impossible. So, for example, a lady will not be able to quantify the quality of tea; she will compare it with the standard of a properly prepared drink, the taste of which has been familiar to her since childhood. Typically, in a scientific experiment, an object is compared either with some predetermined standard or with a control object.

Randomization. This is a very important point in planning. In our example, randomization refers to the order in which the cups are presented for tasting. Randomization is necessary to enable the use of statistical methods to analyze study results.

Replication. Repeatability is a necessary component of setting up an experiment. It is unacceptable to draw conclusions about the ability to determine the quality of tea from just one cup. The result of each individual measurement (tasting) carries with it a share of uncertainty arising under the influence of many random factors. Therefore, multiple tests must be performed to identify the source of variability. The sensitivity of the experiment is associated with this property. Fisher noted that until the number of cups of tea exceeds a certain minimum, it is impossible to draw any clear conclusions.
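Why a minimum number of cups is needed can be shown with a short calculation. The sketch assumes the design usually attributed to Fisher, which the text above does not spell out: equal numbers of cups are prepared each way, the lady knows this, and she must pick out the "milk first" cups. A pure guess then gets everything right with probability 1 / C(2n, n) for n cups of each kind. The function names are hypothetical.

```python
from fractions import Fraction
from math import comb


def chance_of_perfect_score(cups_per_kind):
    """Probability of identifying all cups correctly by guessing alone."""
    return Fraction(1, comb(2 * cups_per_kind, cups_per_kind))


def minimum_cups_per_kind(significance):
    """Smallest n for which a perfect score by chance is rarer than `significance`.

    Pass `significance` as a Fraction so the comparison is exact.
    """
    n = 1
    while chance_of_perfect_score(n) >= significance:
        n += 1
    return n


if __name__ == "__main__":
    for n in range(1, 5):
        print(2 * n, "cups:", chance_of_perfect_score(n))
    print("minimum per kind at 1/20:", minimum_cups_per_kind(Fraction(1, 20)))
```

With six cups a perfect score still occurs by chance once in 20 trials; with eight cups it drops to once in 70, which is why too few cups cannot support a clear conclusion.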

Uniformity. Although it is necessary to repeat measurements (replication), their number should not be too large so that homogeneity is not lost. Temperature differences between cups, dulling of taste, etc., when a certain limit number of repetitions is exceeded, can make it difficult to analyze the results of the experiment.

Stratification. Going beyond R. Fisher's example to a more abstract description of experimental design, one can add a further property: stratification (blocking). Stratification is the distribution of experimental units into relatively homogeneous groups (blocks, strata). It allows us to minimize the effect of known non-random sources of variability. Within each block, the experimental error is assumed to be smaller than it would be if the same number of objects were selected for the experiment at random. For example, when a new drug is studied, the factor has two levels, "drug" and "placebo", which are prescribed to men and women. Here gender is a blocking factor by which the subjects are divided into subgroups.

The characteristics of an experimental design described above apply in whole or in part to any scientific experiment. However, to get started, knowledge about the general properties of the study is not enough; more thorough preparation is required. It is impossible to create a detailed guide in one article, so the most general information about the stages of experiment planning will be presented here.

Any research begins with setting a goal. The choice of the problem to study and its formulation will influence both the design of the study and the conclusions drawn from its results. In the simplest case, the problem statement should address the questions "Who?", "What?", "When?", "Why?" and "How?".

The importance of this planning stage is illustrated by a study that collected information on road traffic accidents. Depending on the goal, the work can be aimed at developing a new car or a new road surface. Although the same data set is used, the conclusions differ significantly depending on how the problem is formulated.

After the goal of the work has been chosen, the so-called dependent variables should be determined. These are the variables that will be measured in the study: for example, indicators of the functioning of certain systems of the human body or of laboratory animals (heart rate, blood pressure, enzyme content in the blood, etc.), or any other characteristics of the research objects whose changes will be informative.

Since there are dependent variables, there must also be independent variables. Another name for them is factors. The researcher operates with factors in an experiment. This could be the dose of the drug being studied, the level of stress, the degree of physical activity, etc. The relationship between the factor and the dependent variable is conveniently represented using a cybernetic system, often called a “black box”.

A black box is a system whose operating mechanism is unknown to us. However, the researcher has information about what happens at the input and output of the black box. In this case, the output state functionally depends on the input state. Accordingly, y1, y2, ..., yp are dependent variables, the value of which depends on factors (independent variables x1, x2, ..., xk). The parameters w1, w2, ..., wn represent disturbances that cannot be controlled or change over time.

In general, this can be written as follows: y=f(x1, x2, ..., xk).

Each factor in an experiment can take one of several values. Such values are called factor levels. It may turn out that a factor can take on an infinite number of values (for example, the dose of a drug), but in practice several discrete levels are selected, the number of which depends on the objectives of a particular experiment.

A fixed set of factor levels determines one of the possible states of the black box. At the same time, these are the conditions for conducting one of the possible experiments. If we enumerate all possible sets of such states, we will obtain a complete set of different states of a given system, the number of which will be the number of all possible experiments. In order to calculate the number of possible states, it is sufficient to raise the number of factor levels q (if it is the same for all factors) to the power of the number of factors k.

The set of all possible states determines the complexity of the black box. Thus, a system of ten factors at four levels can be in more than a million different states. Obviously, in such cases it is impossible to conduct a study that includes all possible experiments. Therefore, at the planning stage, the question of how many experiments and which ones are necessary to be carried out to solve the problem is decided.
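The count of possible states, q to the power k when all k factors have q levels, and the enumeration of a small full plan can be sketched as follows. The function names and the example factors ("dose", "stress") are hypothetical.

```python
from itertools import product


def count_states(levels_per_factor):
    """Number of distinct experiments: the product of the level counts.

    When every one of k factors has q levels this equals q ** k.
    """
    total = 1
    for q in levels_per_factor:
        total *= q
    return total


def full_factorial(levels):
    """List every combination of factor levels as a dict {factor: level}."""
    names = list(levels)
    return [dict(zip(names, combo))
            for combo in product(*(levels[name] for name in names))]


if __name__ == "__main__":
    print(count_states([4] * 10))  # ten factors at four levels each
    for run in full_factorial({"dose": [0, 10, 20], "stress": ["low", "high"]}):
        print(run)
```

Ten factors at four levels give 4 ** 10 = 1,048,576 states, the "more than a million" mentioned above, whereas the two-factor example yields only six runs.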

It should be noted that the properties of the object of study are essential for the experiment. Firstly, we need to have information about the degree of reproducibility of the results of experiments with a given object. To do this, you can conduct an experiment, and then repeat it at irregular intervals and compare the results. If the spread of values does not exceed our requirements for the accuracy of the experiment, then the object satisfies the requirement of reproducibility of results. Another requirement for an object is its controllability. A controlled object is an object on which an active experiment can be conducted. In turn, an active experiment is an experiment during which the researcher has the opportunity to select the levels of factors of interest to him.

In practice, no object is fully controllable. As mentioned above, a real object is affected by both controllable and uncontrollable factors, which leads to variability in results between individual objects. Random changes can be separated from regular changes caused by different levels of the independent variables only with the help of statistical methods.

But statistical methods are effective only under certain conditions. One of these is a certain minimum sample size for the experiment. Obviously, the wider the range over which characteristics vary from object to object, the greater the number of replications of the experiment, i.e. the number of experimental groups, should be.

Since an unreasonably large number of trials makes the study too expensive, and an insufficient sample size may compromise the accuracy of the conclusions, determining the required sample size plays a critical role in experimental design. Methods for calculating the minimum sample size are described in detail in the specialized literature and are not presented here. It should be mentioned, however, that they require a preliminary estimate of the average value of the indicator under study and of its error. Publications on similar studies can serve as a source of this information. If no such studies have been carried out, a preliminary "pilot" study is needed to assess the variability of the trait.
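To show how a preliminary estimate of variability feeds into the sample size, here is one textbook formula, chosen for illustration and not taken from the article: to estimate a mean within a margin E at a given confidence level, with standard deviation sigma assumed known from a pilot study, n = (z·sigma / E)² rounded up, where z is the normal quantile. The function name `sample_size_for_mean` and the numbers are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_for_mean(sigma, margin, confidence=0.95):
    """Minimum n so that the confidence interval half-width is at most `margin`.

    Assumes approximately normal data and a known standard deviation `sigma`.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided quantile
    return math.ceil((z * sigma / margin) ** 2)


if __name__ == "__main__":
    # Pilot study suggests sigma of about 10 units; desired precision 2 units.
    print(sample_size_for_mean(sigma=10, margin=2))
```

Halving the margin roughly quadruples the required sample, which is why the accuracy requirement has to be fixed before the experiment is costed.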

The next step in designing experiments is randomization. Randomization is a process used to group subjects so that each has an equal chance of being assigned to a control or treatment group. In other words, the selection of study participants must be random so that the study is not biased toward the researcher's “preferred” outcome.

Randomization helps prevent bias due to reasons that were not directly taken into account in the experimental design. For this purpose, for example, the formation of experimental groups of laboratory animals is carried out randomly. However, complete randomization is not always possible. Thus, clinical trials involve patients of a certain age group, with a predetermined diagnosis and severity of the disease, and, therefore, the selection of participants is not random. In addition, so-called “block” experimental designs limit randomization. These designs imply that selection into each block is carried out in accordance with certain non-random conditions, and random selection of study subjects is possible only within blocks. The randomization process is easy to implement using specialized statistical software or special tables.
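Random assignment restricted to blocks can be sketched in a few lines. This is an illustrative sketch only: the function name `block_randomize`, the group labels, and the use of gender as the blocking factor in the example are assumptions, and real trials normally rely on dedicated statistical software.

```python
import random
from collections import defaultdict


def block_randomize(subjects, block_of, groups=("treatment", "control"), seed=None):
    """Assign subjects to groups at random, separately within each block.

    `block_of` maps a subject to its block (the non-random condition);
    within a block the groups are filled in turn from a shuffled list,
    so group sizes differ by at most one.
    """
    rng = random.Random(seed)
    blocks = defaultdict(list)
    for subject in subjects:
        blocks[block_of(subject)].append(subject)

    assignment = {}
    for members in blocks.values():
        rng.shuffle(members)  # randomness acts only inside the block
        for i, subject in enumerate(members):
            assignment[subject] = groups[i % len(groups)]
    return assignment


if __name__ == "__main__":
    people = [("s1", "F"), ("s2", "F"), ("s3", "F"), ("s4", "F"),
              ("s5", "M"), ("s6", "M"), ("s7", "M"), ("s8", "M")]
    for subject, group in block_randomize(people, block_of=lambda s: s[1], seed=7).items():
        print(subject, group)
```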

In conclusion, the research plan must take into account not only the requirements of medicine and statistics but also moral and ethical standards. It should not be forgotten that both people and laboratory animals may be involved in an experiment only in accordance with ethical principles.

When experimenting, even an experienced researcher is not guaranteed against errors and distortions of information. Some of them can be eliminated by a more careful approach to the design of the experiment. Others cannot be eliminated in principle. But allowing for this very possibility, the possibility of errors, makes it possible to introduce the necessary corrections.

First of all, something that is not in fact an experiment can be mistakenly called one. In a parallel experiment one may, for example, change the wage system in one factory team but not in another, and it may turn out that labor productivity has increased in the first team. However, such a situation is in no way experimental unless the important characteristics of both groups are taken into account and brought under control.

The experimental and control teams must be equal in size, type of activity, distribution of production functions, type of leadership or other characteristics important from the point of view of the hypothesis. If any important group properties cannot be equalized, you should try to somehow neutralize or fix them and take them into account when analyzing the results.

If the sociologist does not do this, he has no right to call the situation he has created experimental or to explain the change in productivity by the change in the wage system, since the change in productivity may have been caused by some other, random factor and not by the change in wages. Before calling a study experimental, the researcher must consider whether he has grounds for doing so, in other words, whether he has created the necessary conditions and provided the necessary level of measurement and control.

When a hypothesis is formulated, and when a general hypothesis is translated into operational variables, errors may arise from the logic of the reasoning.

When a hypothesis is formulated, the mechanisms and connections taken to be the determining cause may be identified erroneously. This usually happens when little-known phenomena are studied, and then the negative results obtained in the experiment are a positive contribution to the development of a theoretical model of the object, since they show that the given mechanism or connection does not determine the processes that occur.

Errors are also possible when moving from the definition of a hypothetical connection to the description of its empirical indicators. Poorly chosen indicators render an experiment worthless, no matter how carefully it was conducted. Errors can also arise from the subjective perception of the situation by both the participants and the researcher. The experimenter often tends to overestimate the impact of the variable under study, and so tends to interpret any ambiguous fact in the direction he desires.

Members of the experimental group also have the opportunity to subjectively interpret the situation: they can perceive certain features of the experimental situation in accordance with their own attitudes, and not in the meaning in which they appear to the experimenter. Such a discrepancy in perception, if it is not taken into account when planning an experiment, will certainly affect the analysis of the results and significantly reduce their reliability.

Weakening control and reducing the degree of “purity” of the experiment increases the possibility of the influence of additional variables or random factors, which cannot be taken into account or assessed at the end of the experiment. This, in turn, greatly reduces the reliability of the conclusions drawn.

An insufficiently experienced researcher faces dangers associated with the use of statistical methods. He may use methods that do not correspond to the research task. This possibility applies both to the construction of the experimental group and to the method of analyzing the results.

The use of experiment in sociology involves a number of difficulties that prevent it from achieving the purity of a natural science experiment: it is impossible to eliminate the influence of relationships that exist outside what is being studied, to control factors to the extent possible in a natural science experiment, or to repeat the experiment in the same form and with the same results.

An experiment in sociology directly affects specific people, and this also poses ethical problems, naturally narrows the scope of the experiment and demands increased responsibility from the researcher.

Section four

Chapter XV discusses the main issues of statistical processing of experimental results: determining the most reliable value of a measured quantity and the error of that value from several measurements, assessing whether the difference between two close values is significant, establishing a reliable functional relationship between two quantities, and approximating this relationship.

The chapter is auxiliary in nature. Its material is presented in reference form, without proofs. A justification and a more detailed description of the methods above is available, for example, in.

1. Experimental errors.

Numerical methods are often used in mathematical modeling of physical and other processes. The results of the calculation are then compared with experimental data, and the quality of the chosen mathematical model is judged by how well the two agree. To draw a well-founded conclusion about agreement or disagreement, the person performing the calculation must know what the experimental error is and how to handle it, and must also be able, if necessary, to carry out statistical processing of the raw experimental data.

In addition, the statistical processing of an experiment is of independent interest. It is very important in applications where either particularly high accuracy is required (for example, the adjustment of triangulation networks in geodesy) or the scatter of individual measurements exceeds the effect under study (which often happens in particle physics, the chemistry of complex compounds, the testing of agricultural varieties, medicine, etc.).

Typically, the more precise the experiment, the more complex equipment it requires and the more expensive it is. However, well-thought-out mathematical processing of results in some cases makes it possible to identify and partially eliminate measurement errors; this may be no less effective than using more expensive and accurate equipment. This chapter will discuss statistical processing that can significantly reduce and accurately estimate random measurement error.

Experimental errors are conventionally divided into systematic, random and gross. Let us look at them in more detail.

Systematic errors are those that do not change when a given experiment is repeated many times. Examples of such errors are neglecting the buoyant action of air in precise weighing, or measuring current with a galvanometer whose zero is set incorrectly. There are three types of systematic errors.

a) Errors of a known nature, the magnitude of which can be determined; they are called corrections. Thus, in precise weighing, a correction for the buoyant action of air is calculated and added to the measured value. Applying corrections can significantly reduce (or even virtually eliminate) errors of this kind.

Note that calculating corrections can sometimes be a complex mathematical problem in its own right. For example, the ill-posed problem (14.2) of restoring a transmitted radio signal from a received one is, in essence, the search for a correction for the distortion introduced by the receiving equipment.

b) Errors of known origin but unknown magnitude. These include the error of measuring instruments, determined by their accuracy class. For such errors, only the upper limit is usually known, and they cannot be taken into account as corrections.

c) Errors whose existence is unknown to us; for example, a device with a hidden defect or a worn device is used, and its actual accuracy is significantly worse than stated in its technical data sheet.

To identify systematic errors of all types, the equipment is usually tested in advance on reference objects with well-known properties.
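As an illustration of a correction of type (a), the sketch below computes the air-buoyancy correction for a weighing against reference weights. It uses the standard balance condition m(1 - rho_air/rho_object) = m_w(1 - rho_air/rho_weights). The function name and the default densities (1.2 kg/m^3 for air, 8000 kg/m^3 for the weights) and the sample reading are assumptions chosen for the example, not values taken from this chapter.

```python
def buoyancy_corrected_mass(reading, rho_object, rho_weights=8000.0, rho_air=1.2):
    """Return the mass corrected for the buoyant action of air.

    reading     -- balance reading, i.e. mass of the reference weights (any mass unit)
    rho_object  -- density of the weighed object, kg/m^3
    rho_weights -- density of the reference weights, kg/m^3 (assumed value)
    rho_air     -- density of air, kg/m^3 (assumed value)
    """
    # Balance condition: m * (1 - rho_air/rho_object) = reading * (1 - rho_air/rho_weights)
    return reading * (1.0 - rho_air / rho_weights) / (1.0 - rho_air / rho_object)


# Hypothetical example: 100 g of a water-like sample (density 1000 kg/m^3).
reading = 100.0
corrected = buoyancy_corrected_mass(reading, rho_object=1000.0)
correction = corrected - reading  # the amount to be added to the reading
print(f"correction = {correction:.4f} g, corrected mass = {corrected:.4f} g")
```

For a low-density sample the correction is of the order of a tenth of a percent of the reading, which is why it matters only in precise weighing.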

Random errors are caused by a large number of factors, which, when repeating the same experiment, can act differently, and it is almost impossible to take their influence into account. For example, when measuring the length of an object, the ruler may not be applied accurately, the observer's gaze may not fall perpendicular to the scale, etc.

If the experiment is repeated many times, the results will differ from one another because of random error. However, such repetition, together with appropriate statistical processing, makes it possible, firstly, to determine the magnitude of the random error and, secondly, to reduce it. By repeating the measurement a sufficient number of times, the random error can be reduced to the required value (it is advisable to reduce it to 50-100% of the systematic error).
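The effect of repetition can be sketched numerically. The example below assumes independent measurements with the same spread, in which case the random error of the mean falls as the single-measurement standard deviation divided by the square root of the number of measurements. The function names, the readings and the systematic error value are hypothetical and serve only as an illustration.

```python
import math
import statistics


def mean_and_standard_error(values):
    """Return the sample mean and the standard error of that mean."""
    n = len(values)
    mean = statistics.fmean(values)
    spread = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    return mean, spread / math.sqrt(n)


def repetitions_needed(single_std, target_error):
    """Smallest n for which single_std / sqrt(n) does not exceed target_error."""
    return math.ceil((single_std / target_error) ** 2)


# Hypothetical repeated readings of one quantity.
readings = [10.3, 9.8, 10.1, 10.4, 9.9, 10.0, 10.2, 9.7]
mean, sem = mean_and_standard_error(readings)

# Hypothetical systematic error; the target is a random error no larger than it.
systematic_error = 0.05
n_required = repetitions_needed(statistics.stdev(readings), systematic_error)

print(f"mean = {mean:.3f}, random error of the mean = {sem:.3f}")
print(f"repetitions needed to reach {systematic_error}: {n_required}")
```

Because the error of the mean decreases only as the square root of the number of measurements, halving it requires four times as many repetitions.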

Gross errors are the result of the inattentiveness of the observer, who may write down one digit instead of another.

With a single measurement, a gross error cannot always be identified. But if the measurement is repeated several times, statistical processing determines the probable limits of the random error. A measurement that falls significantly outside these limits is considered grossly erroneous and is excluded from the final processing of the results.
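A minimal sketch of such screening is given below. It is not necessarily the criterion used in this chapter: it assumes a simple rule in which each measurement is compared with the mean and standard deviation of all the other measurements and is flagged if it deviates by more than k standard deviations. The function name, the threshold k = 3 and the readings are assumptions made for the example.

```python
import statistics


def find_gross_errors(values, k=3.0):
    """Return indices of values lying more than k standard deviations
    from the mean of the remaining measurements (leave-one-out check).

    Requires a list with at least three values.
    """
    suspects = []
    for i, x in enumerate(values):
        others = values[:i] + values[i + 1:]
        center = statistics.fmean(others)
        spread = statistics.stdev(others)
        if abs(x - center) > k * spread:
            suspects.append(i)
    return suspects


# Hypothetical readings; 19.0 mimics one digit written down instead of another.
readings = [10.3, 9.8, 10.1, 10.4, 9.9, 19.0, 10.2, 9.7]
suspects = find_gross_errors(readings)
cleaned = [x for i, x in enumerate(readings) if i not in suspects]

print("suspect indices:", suspects)
print("mean of remaining readings:", round(statistics.fmean(cleaned), 3))
```

Leaving the tested value out of the estimate keeps a single large outlier from inflating the spread and hiding itself. With several gross errors in one series this simple check can fail, and a more robust estimate of the spread would be needed.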

Thus, if the measurement is repeated sufficiently many times, gross and random errors can be practically eliminated, so that the accuracy of the answer is determined by the systematic error alone. However, in many applications the required number of repetitions turns out to be unacceptably large, and with a realistic number of repetitions the random error can be decisive.